Until now, it almost went without saying: to recognize a face, you had to be able to see the face. However, that’s about to change thanks to the latest version of CyberExtruder’s Aureus 3D face recognition software.

Consider a scenario, such as a bank robbery or terrorist attack, in which the bad guys are completely masked. One of the best tools law enforcement officers have is face recognition software, which can produce a list of potential suspects from the available image evidence. But the bad guys know this, and the evidence trail they leave behind often consists of very poor-quality images with large portions of the face obscured by dark glasses, hats, and even masks.

Because of this, face recognition software is commonly asked to produce a list of the top 20, 50 or even 100 candidates. This may (or may not) begin to narrow the haystack in which investigators are seeking a needle. Since investigators want to err on the side of caution, asking for a large list of potential matches increases the likelihood that a ‘hit’ is somewhere in the pool of candidates.
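Requesting a top-20, 50 or 100 list amounts to scoring every gallery entry against the probe and keeping the highest-scoring subjects. The sketch below illustrates that idea in plain Python under simple assumptions: each image has already been reduced to a feature vector, each subject has a single gallery vector, and cosine similarity is the score. These are illustrative choices, not a description of how Aureus 3D computes or compares its features, and `top_k_candidates` is a hypothetical name.

```python
import heapq
import math


def cosine_similarity(a, b):
    """Cosine similarity between two equal-length, non-zero feature vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def top_k_candidates(probe, gallery, k):
    """Hypothetical helper (not an Aureus 3D API): the k most similar subjects, best first.

    probe   -- feature vector for the (possibly masked) probe image
    gallery -- dict mapping a subject ID to that subject's feature vector
    k       -- length of the candidate list, e.g. 20, 50 or 100
    """
    scored = [(cosine_similarity(probe, vec), subject) for subject, vec in gallery.items()]
    # nlargest returns the k highest (score, subject) pairs in descending order
    return [(subject, score) for score, subject in heapq.nlargest(k, scored)]


# Toy gallery of three subjects with 3-dimensional feature vectors
gallery = {
    "subject_a": [1.0, 0.0, 0.0],
    "subject_b": [0.8, 0.6, 0.0],
    "subject_c": [0.0, 0.0, 1.0],
}
probe = [0.9, 0.1, 0.0]
candidates = top_k_candidates(probe, gallery, k=2)
print([subject for subject, _ in candidates])  # ['subject_a', 'subject_b']
```

`heapq.nlargest` avoids fully sorting the gallery when k is much smaller than the number of enrolled subjects, which is the usual situation for candidate lists of 20 to 100 entries.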

Human experts then manually cull unlikely possibilities from this large, software-generated candidate list. They pay close attention to facial details, or ancillary features, such as the shape of an earlobe or the symmetry of a nose, and remove any candidate whose features they deem “definitely not the same.” This can be a tedious and mind-numbing exercise that taxes the abilities of even the most seasoned experts.

At CyberExtruder, we looked at this approach and wondered how we might help in these situations. If expert investigators examine the nuances of specific facial features to tell one image from another, perhaps our software could do something similar.

The first challenge was recognizing that, although an image contains some information we want to use (Signal), much of the rest is either missing in the first place, such as the parts of the face hidden by a mask, or irrelevant and best ignored (Noise). In other words, the signal-to-noise ratio is very low.

We started our work by focusing on the ears, the jawline, the nose and the eyes. Previous work we’d done for the US Army demonstrated that ears are a useful biometric because, like fingerprints, they are unique. We also recognized that an image giving a clear view of an ear usually gives a poor view of the face, at least from the standpoint of being useful for face recognition.

We also thought about the spate of terrorist videos and images in which the perpetrators hide behind balaclavas or ski masks while performing for the camera. We reasoned that if we could focus on the limited Signal available in the eye region and ignore the Noise created by the mask, we might still obtain reasonable matching results.

Test protocol

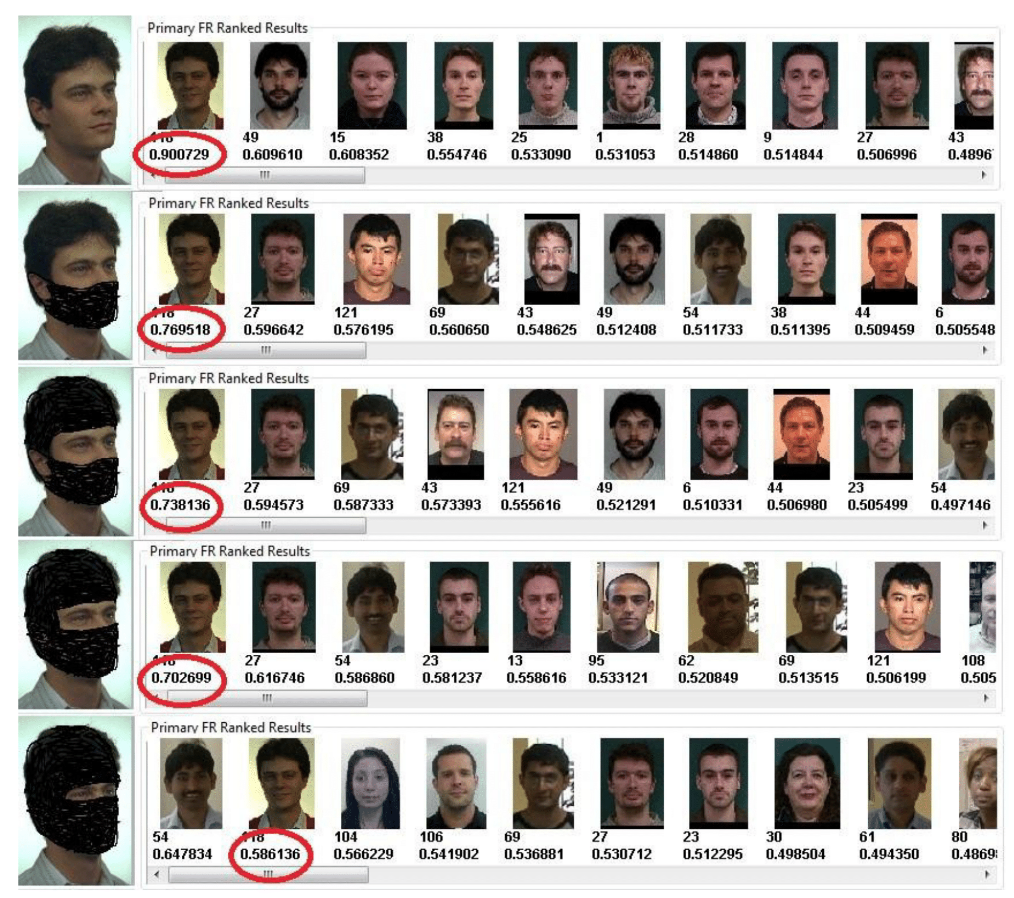

To test identification of people wearing masks, we used the Aureus 3D v5.7 matcher and submitted probe images with progressively greater masking, ranging from small to large face coverage. The image in Figure 2 below helps to visualize these stages.

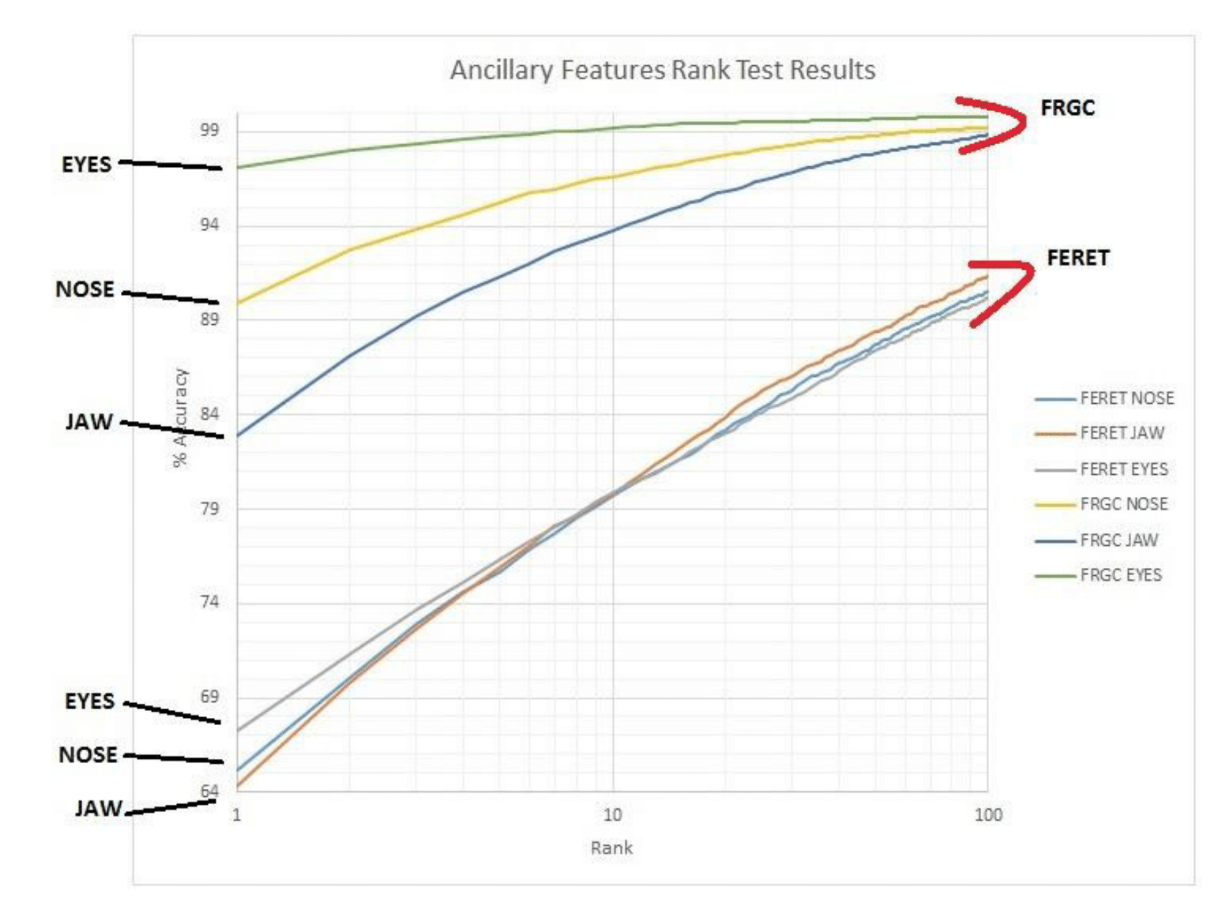

We created an ancillary-feature deep neural network for each facial-feature biometric and then attempted to match the masked probe images to the unmasked gallery images. To evaluate how well the process worked, we modified two well-known NIST datasets: FRGC and FERET. The FRGC set contains approximately 22k front-facing images with lighting variation and some expression variation. The FERET set contains approximately 8k images with large head-pose variation and some lighting and expression variation (full profiles were removed).

Using the eyes feature on front-facing images (the FRGC set), CyberExtruder’s Aureus 3D achieved a Rank 1 accuracy of 97%. Head pose degrades match scores, as we saw when we ran the same test on the FERET set, which contains highly posed subjects. Even so, on FERET the eyes feature achieved a Rank 10 accuracy of 80% despite the large pose.

Both FRGC and FERET were used as closed-set tests: in each dataset, every person has two or more images, so every probe has at least one matching image of the same person in the gallery. Rank tests were performed throughout, with the maximum rank limited to 100.
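In a closed-set test every probe’s true identity is enrolled in the gallery, so each probe has a well-defined rank: the position at which its mate first appears in the sorted candidate list. Rank k accuracy (such as the Rank 1 and Rank 10 figures reported above) is the fraction of probes whose mate appears at rank k or better, and computing it for k = 1 to 100 gives a cumulative match characteristic (CMC) curve. The sketch below shows that bookkeeping in plain Python. It is not Aureus 3D’s evaluation code; it assumes similarity scores have already been computed, and the function names and score-matrix layout are hypothetical.

```python
# Hypothetical helpers for illustration; not part of any Aureus 3D API.

def mate_rank(scores, gallery_ids, true_id):
    """1-based rank of true_id among distinct gallery identities.

    scores      -- similarity of one probe to each gallery image
    gallery_ids -- subject ID of each gallery image (same order as scores)
    true_id     -- the probe's real identity; a closed set guarantees it is enrolled
    """
    # Visit gallery images from most to least similar
    order = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    seen = set()
    for idx in order:
        subject = gallery_ids[idx]
        if subject in seen:
            continue  # an identity is ranked by its best-scoring image only
        seen.add(subject)
        if subject == true_id:
            return len(seen)
    raise ValueError("closed-set test: the probe's mate must be in the gallery")


def cmc(score_matrix, gallery_ids, probe_ids, max_rank=100):
    """Cumulative match curve: element k-1 is the Rank k identification rate."""
    ranks = [mate_rank(row, gallery_ids, pid) for row, pid in zip(score_matrix, probe_ids)]
    n = len(ranks)
    return [sum(r <= k for r in ranks) / n for k in range(1, max_rank + 1)]


# Toy example: two probes against a three-person gallery
gallery_ids = ["alice", "bob", "carol"]
probe_ids = ["alice", "bob"]
score_matrix = [
    [0.9, 0.2, 0.1],  # alice's probe: her gallery image scores highest (rank 1)
    [0.7, 0.6, 0.1],  # bob's probe: alice outscores bob, so the mate is at rank 2
]
curve = cmc(score_matrix, gallery_ids, probe_ids, max_rank=3)
print(curve)  # [0.5, 1.0, 1.0]
```

Ranking distinct identities rather than individual images matters when people have two or more images each: otherwise a single non-matching person with several gallery images could occupy several ranks and push the true mate down the list.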

Ancillary Features Conclusions

At Rank 10 or greater on images with large pose, the jaw feature was the most accurate biometric; at Rank 10 or better on images that are largely front-facing, the eyes feature produced the most accurate results.

Investigating Facial Recognition Technology?

If you are investigating facial recognition technology as a means to solve an identity management problem and would like to learn more about CyberExtruder’s software, we encourage you to dig in and get to know us. It’s easy to claim to be the best, the fastest, the most accurate or the industry leader; it’s another thing to prove it by solving customers’ challenges and delivering a return on their investment.

CyberExtruder has earned its reputation for having the best technology by applying advanced concepts in the development of its facial recognition algorithms, by being highly responsive to client needs, and by delivering on time and within budget. Whether you’re an OEM Partner, a Solutions Integrator or an End User, we have the facial recognition software solutions to meet the challenge. If you’re interested in seeing Aureus 3D in action, request a demo today.